Subway Tennis

Legend

I rejected at door today so yes fast. Picked up better Omen at bestbuy Saturday for same price.

They're getting good reviews. So the omen you got at best buy was actually the highest spec version now?

Possibly highest spec AMD, so highest processor. They have Nvidia 2070 etc., so not on GPU.

And a very good display, too.

For gaming yes. 4K display might have been nice, but overkill since I have a better TV and an iPad at 600 nits.

I was looking at server systems to get more cores to keep power use down. It is possible that I will need more CPU resources as well. I'm a bit surprised at how much horsepower these trading applications use as I add more charts to monitor.

The Mac Pro uses Xeons and can address up to 1.5 TB of RAM. I think that 64 - 128 GB will be the most that I need for trading.

Installed the PSU and routed the cables (only three needed). The routing capabilities through the back of the case are quite nice. I'm going to hold off on putting in the SATA3 SSD until I verify that the system boots. I still need to build a boot installer.

Attached a bunch of the header cables and just have several that I'm not sure of and the on/off, reset switches and lights. Staring at the manuals helps.

I just move SATA stuff over to the M.2 SSD since it is 5X faster. I think you have to call Microsoft to get a code to free Windows too and/or pay an upgrade fee.

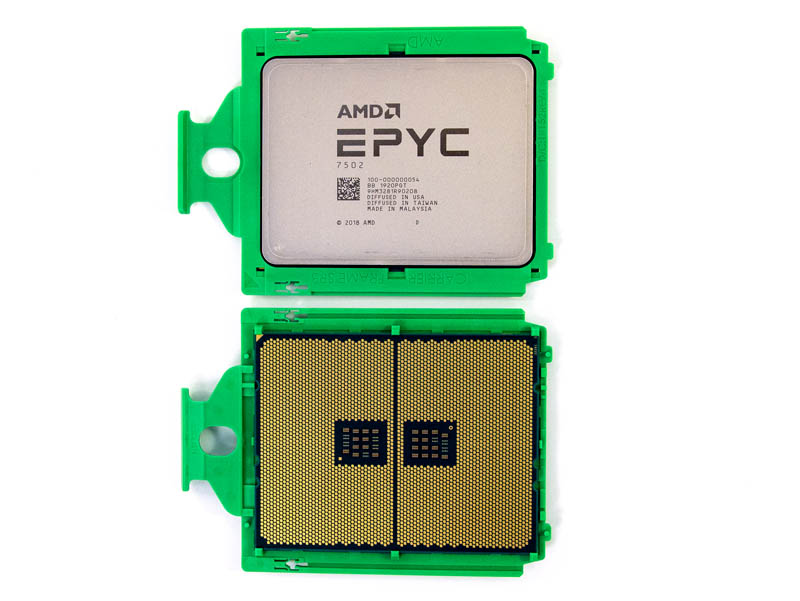

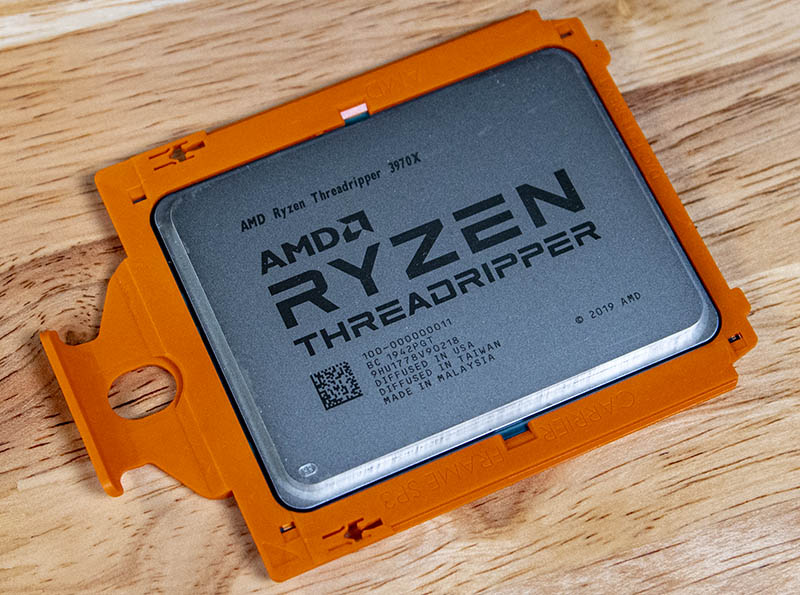

The best Xeon available for the Mac Pro is the W-3275M, which, despite the confusing naming convention, is a workstation-class CPU and not a server-class CPU. As for its performance, it is slower than the Threadripper 3970X while consuming more power, but this is something we had talked about before.

AMD Ryzen Threadripper 3970X Review 32 Cores of Madness

The AMD Ryzen Threadripper 3970X is a 32-core workstation CPU that offers an enormous amount of performance and PCIe bandwidth at a reasonable price. www.servethehome.com

AMD's Zen 2 architecture is in about every way better than Intel's best HEDT or server platforms. It is why the Mac Pro caters to a very niche audience: notably people who have little choice but to work with macOS professionally, because switching to a Windows or Linux based platform isn't realistic for them for various reasons, and who really want an update to the trash can. I can understand that.

The Apple Silicon MacBook Pros coming out soon are supposedly priced at twice the price of Intel MacBook Pros. I will be seriously interested in the new hardware if the performance is actually worth paying double the price of x64.

x86/x64 in general I don't know and don't think so, but x86/x64 on Mac, maybe, because of how power constrained some of the MacBooks (and almost every Mac except for the Mac Pro, for that matter) are due to insufficient cooling. I think in particular about the MacBook Air, which has essentially no cooling whatsoever. The worries I had talked about a bit earlier were more about application support. Quite honestly, even if I were a macOS aficionado, I would postpone any purchasing decision not only until benchmarks arrive but for about a year. There are too many unknowns about software compatibility, and I think gremlins will have to be sorted out, so waiting a year isn't particularly pessimistic in this instance.

I'm open to being surprised. Apple's silicon on phones and tablets is genuinely pretty good. We'll see, but patience doesn't hurt.

Installing Windows. CPU temp 23 degrees. Not bad overall.

that's very cool... my idle (ryzen7) is in the 50's with the stock wraith prism. what cooler do you have? though, can't imagine it would make it 30C cooler. guessing intel's run cool?

That was the temperature in the BIOS. I hadn't installed Windows yet. Cooler is Arctic eSports Duo 34. I will have to get a program to display temps but I want to install the video card before doing that.

will be interesting if you're able to get much from OC'ing

Seems someone doesn't care about cable management too much.

hehe, i was thinking it, but decided to bite my tongue... i know i've redone my and my kid's machines because i wasn't happy with the rat's nest of cables

It’s not done yet. I have to add hardware to both sides. I could tie it, clip the ties, add hardware, and then add ties again but that would seem like a waste.

what i noticed is that some cables were not being routed via the port closest to where it was ultimately being connected... they mostly are using the top one... on a good note, the cable management in the *back* of your case looks clean (because all the cables were pulled into the mobo side)

There are only three cables in the back: the power cable to the top of the motherboard (and there's really only one way to route that), the big power cable, and the power cable for the front panel. I have to add a SATA3 cable when I put in the SSD. The routing ports came in pretty handy. The other cables I just put together because I just wanted to get the thing up and running. The case fans have very long cables. The left side cables were all together and routed through one of the routing ports. I will have to think about splitting them up though.

I'm copying my media files off my old PC right now and that will probably take quite some time, and then I will copy them to the new system. I need the old system for trading during the day so I don't know if I'll be able to switch things over tonight.

This case does make a lot of things pretty easy.

I don't think that it makes sense to do the final cable stuff until all of the hardware is in.

On the plus side, the case came with a ton of zip-ties. I have a device that's really good for cutting the excess as well.

yup, i've done it that way too...

some tips i learned:

* i mount everything to the mobo that i can: cpu, cpu air cooler (if applicable), mem, gpu, m2

* when testing the system, i don't put the mobo in the case, and power up everything (ie. sitting on the box the mobo came in)

* after successful POST, then i'll install the mobo to the case (usually have to remove the gpu to access at least one of the mobo screws)

* install gpu

* install hdd, ssd

* lay out all the cables without plugging anything into mobo (ie. using the routing ports closest to where each one plugs into the mobo) - it's ok if the *back* is a mess, can clean that up later... and hide it behind a non-transparent door

fan cables are particularly tricky because the fan headers can be located all over the mobo

* then lastly plug in everything

caveat, notice which ports on the mobo are going to be a problem when in the case... eg. the sata ports might be a 90 degree plug, some ports might be *under* the gpu (if your gpu is really long), some sata ports might be stacked, etc... try to plug these in ahead of time if you can... (i've had to install/uninstall the mobo/gpu a couple times because of this...)

the case is nice, i like the handles on top.

forgot to add, make sure you have enough fan headers for fans (or pump if you go liquid)... else preorder a splitter if you don't have one lying around. ask me how i know...

Good tips. One other thing is that NVMe drives use the SATA ports (at least on my motherboard). So when adding regular SATA3 drives, you have to note which SATA ports are used or shared by the NVMe drives. I might have been better off just getting a second 2 TB NVMe and mounting it on the motherboard. I really don't need the 2 TB in the old system though.

I checked the CPU/Motherboard temps after running Windows for several hours and rebooting and they were 26-29 degrees. So this thing is pretty cool at idle. I need to install OpenHardwareMonitor to see what the temps are like when Windows is running and when it's actually doing something.

unless you have a nas somewhere to throw the 2tb in, might as well install it... it'd bother me more that it was lying around not being used. also will hate to have to disco everything if later i do need to install the 2tb drive.

eh, i guess you can just throw it into a portable enclosure...

The motherboard has a fair number of fan headers. The CPU cooler has two fans but they have a cable that joins them to one. There are three case header cables and I didn't know what they are for but I suspect that they are for powering fans. There's a slide switch on the front panel and it has three settings for manual fan control. So you can have three out of a possible eight fans controlled manually. I'm just using the default three case fans and these seem more than enough to keep this thing cool. It's only a 65 Watt CPU and the GPU uses either 75 or 100 Watts max.

I was considering building a NAS as my old desktop is the home NAS. I have a couple of 500s and a 120 that aren't in use and in enclosures but I'd rather they be inside a system. I prefer a large, flat, space rather than cobbling together smaller devices. SSD storage is so ridiculously cheap these days. I'll put it in the new system and it will be the home NAS for now. I'm going to keep the old system for a backup but might eventually give it away.

i went the synology route, but looking back i regret not just building my own for the learning...

i'm counting 3 fans in your case (so probably that's what the "case header cables" are)... can't see if you have fans on the top of your case.

fan cables these days are very obvious... they have 3 or 4 pins, and a "slot" (ie a way to dummy proof where on the mobo you can plug in cooling h/w)

if it's a newish mobo, might be able to also control fan speeds via the mobo.

agreed, 3 fans probably enough.

aio (all in one liquid coolers) are really popular... but like you, i prefer a gigantic air cooler like what you have (i suffer from liquid-leak-phobia which % wise is unwarranted, but still... also i can see/hear if a fan fails, i can't see if a pump fails, but realistically wouldn't notice either way until pc rando reboots). i currently have a wraith prism (stock with ryzen 7 chip), but if i end up OC'ing (not worth it for ryzen7), i'm considering: https://www.icegiantcooling.com/ (partly because i love the science behind it!)

The case header cables aren't connected. I suspect that you can connect these to the case fans if you want to, using an adapter. But I'd rather have the motherboard control the case fans. The case can take up to three on top, three in the front, one in the back and one at the bottom.

The eSports Duo setup is very nice. You have one intake going into the radiator, one fan on the other side pulling from the radiator and the output of that goes into the exhaust fan. I have no interest in liquid cooling but every example build that I've watched with this case has been with liquid cooling so I guess that liquid cooling is really popular these days. I do wish that there were video cards tuned for my usage - you usually have to get something gaming-specific, or something for specific professional workloads.

I've been using the nVidia 1030 for a while and it runs fine serving one 4k with the iGPU serving the other one. The GTX 1050 Ti has been running in the old laptop driving 1 HD monitor and it has been working fine. So it appears that the GTX 1050 Ti can't smoothly support 2x4k; at least not at the default settings and with the latest drivers. This is a bit annoying as I wanted to run graphics off of a GPU. I have read various articles on difficulties running 2x4k or 3x4k on video cards and I guess I'm a believer. One solution would be to put in another 1030. They are cheap, use 25 watts and would get the job done. This would take care of 2x4k and I could use integrated for the third display. I really wanted a one-card solution though. I will put the system through my trading paces tomorrow to see how it goes and check the thermals. I'm hoping for the best.

Unfortunately I will likely have to make some additional hardware changes.

Run one display off the iGPU at 4K! It should be more than adequate for handling the trading graphics from your software.