Who's the Best Tennis Player of All Time?

- Thread starter TennisMaverick

- Start date

Timbo's hopeless slice

Hall of Fame

erm, not exactly, our man Fellipe has returned with this (brace yourselves):

our Man Fellipe again! said:

There is no glory at all nor is deep science. It is just an application of well known algorithm for ranking nodes in a network. The interpretation of the algorithm is fundamentally different from what reported by reportes. They oversimplified the technique and this unfortunately has caused many missunderstandings.

The approach is very simple. Each tennis player initially owns the same amount of credit or prestige. Let say just a unit. This assumption is quite reasonable because in absence of information (who won against whom, etc..) there is no possibility to say who is better.

Now if player A looses against player B, A gives his total credit to B. When you put more information (many matches) then the credit starts to flow among players. This diffusive process of credit, always reaches a stationary state, which means that the credit flowing in and flowing out from each player is the same. PageRank just measures the ammount of credit that you observe in the stationary state. Notice that, the score of one player is not only related to his personal performances, but also to those of his opponents, those of the opponents of his opponents, and so on...

When tournaments are considered as independent events, the equation of pagerank reduce to a very simple assignation of scores (similar to the one used by ATP, if I am not wrong). Basically, players loosing at first round receive 1 point, players loosing at second round receive 2 points, players loosing at third round receive 4 points, .... points are doubled at each round. Grand Slam winners take 128 points, finalist 64. In tournaments with less rounds, like ATP master series, the winner take 64 points.

In my algorithm, tournaments are not treated as independent, but matches among the same opponents are aggregated over many tournaments. This means that winning a first round against the winner of Wimbledon, even if in a minor tournament, is more important than winning at the same round and tournament against a player who have lost at the first round of Wimbledon.

Ok, I do not want to annoying you with other details. I just would like to state that there is not personal input in the algorithm. There is only the assumption that matches represent basic contacts between players.

Notice also that you can construct different networks by imposing filters: for example, consider only tournaments played on grass, only tournaments played in year 2000, only Grand Slams, etc...

and he uses LOOSE instead of LOSE!!

ARRRGGHH!
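For anyone who wants to see the "credit flow" idea from the email in action, here is a minimal Python sketch. It is not the author's code. It assumes a standard damped PageRank (the 0.85 damping value, the even redistribution of credit held by players with no recorded losses, and the names `prestige` and `round_points` are all assumptions made for illustration). The second helper only restates the doubling rule described for the independent-tournament case.

```python
from collections import defaultdict


def prestige(matches, damping=0.85, tol=1e-12, max_iter=1000):
    """Damped PageRank-style score on a (winner, loser) match list.

    Each loser passes credit to the players who beat him, split in
    proportion to how often each of them beat him. Scores sum to 1.
    """
    matches = list(matches)
    players = sorted({p for match in matches for p in match})
    n = len(players)

    # losses[loser][winner] = number of times loser lost to winner
    losses = defaultdict(lambda: defaultdict(int))
    for winner, loser in matches:
        losses[loser][winner] += 1

    score = {p: 1.0 / n for p in players}  # everyone starts with equal credit
    for _ in range(max_iter):
        # Assumption: credit held by never-beaten players is spread evenly.
        dangling = sum(score[p] for p in players if p not in losses)
        base = (1.0 - damping) / n + damping * dangling / n
        new = {p: base for p in players}
        for loser, beaten_by in losses.items():
            total = sum(beaten_by.values())
            for winner, count in beaten_by.items():
                new[winner] += damping * score[loser] * count / total
        delta = sum(abs(new[p] - score[p]) for p in players)
        score = new
        if delta < tol:  # stationary state reached
            break
    return score


def round_points(rounds_won):
    """Doubling rule from the email: 1 point for a first-round loss,
    2 for a second-round loss, and so on (2 ** rounds_won)."""
    return 2 ** rounds_won


if __name__ == "__main__":
    toy = [("A", "B"), ("A", "C"), ("B", "C")]  # (winner, loser) pairs
    for player, value in sorted(prestige(toy).items(), key=lambda kv: -kv[1]):
        print(player, round(value, 3))
    print(round_points(7), round_points(6))  # 7-round winner, finalist
```

In the toy example A beats both B and C, and B beats C, so the credit ends up ordered A, B, C. Swapping in a filtered match list (grass only, one season only, and so on) is just a matter of passing a different list of pairs.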

veroniquem

Bionic Poster

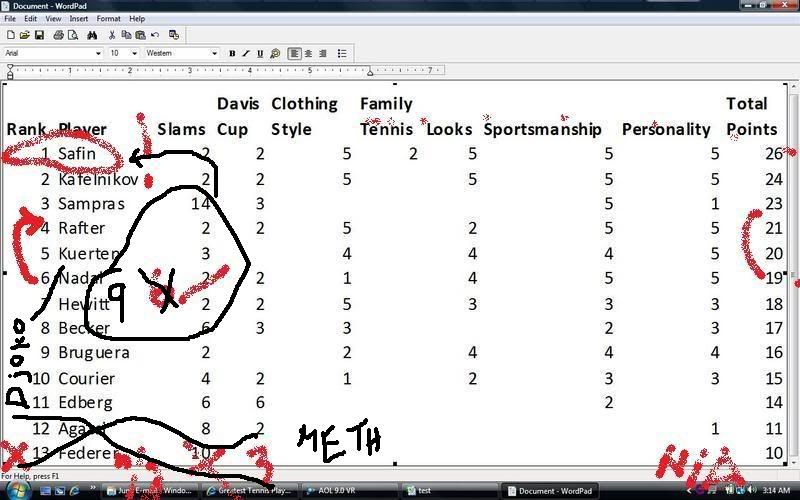

Oh thanks for bumping it. I loved those charts. So much fun. I am totally nostalgic of that time when *******s hadn't become pompous and overbearing yet and people could have some good innocent fun around here

We did much better study some years ago, it's

1. Safin

2. Nadal

3. Sampras

4. Kafelnikov

Sorry if it hasn't been updated. Djokovic should be in top 10.

SoBad

G.O.A.T.

Oh thanks for bumping it. I loved those charts. So much fun. I am totally nostalgic of that time when *******s hadn't become pompous and overbearing yet and people could have some good innocent fun around here

Thanks, I wish I still had access to the original scientific research files, but actually not much has changed. The only mover up the chart has been Nadal, jumping from No. 6 to No. 2 before Sampras. Djokovic also needs to be entered into the picture now that he's not a one-slam wonder anymore, but the original data files need to be reconstructed in order to update the findings.

veroniquem

Bionic Poster

I'm all for the updates, vamos!

Manus Domini

Hall of Fame

erm, not exactly, our man Fellipe has returned with this (brace yourselves):

and he uses LOOSE instead of LOSE!!

ARRRGGHH!

His reply to me was to be less formal lol

SoBad

G.O.A.T.

TennisMaverick

Banned

I meant about this quote:

You actually believe those algorithms weren't based on personal opinion?

Personally, insults actually involving ADHD, Diabetes, Schizophrenia, cancer, mental retardation, etc. truly **** me off. Jokes I can deal with, but serious comments are infuriating. Shoot about bias all you want, I just want a para of rant on that subject

and I am certainly not emo

Comments regarding how the algorithm was chosen, was not what my retort was about. It was about the definition of algorithm, quite obviously.

Secondly, do you UNDERSTAND the difference between ending a sentence with a period, as opposed to a question mark?

"can you not read, have a coding issue, or are you afflicted with ADD??"

Deleted member 120290

Guest

2 observations: 1. How can Mark Philippoussis not make this list? 2. Northwestern just plummeted in US News & World Ranking of Universities or is it a City College now?

Timbo's hopeless slice

Hall of Fame

I just want to say to Jimbo: 'congratulations on your award, but BEYONCE WAS THE BEST TENNIS PLAYER OF ALL TIME!!!!'

Bud

Bionic Poster

erm, that'll teach me, then..

He basically admitted that his algorithm is crap and contains a HUGE number of shortcomings :-?

When creating any type of algorithm to model data and then convert that to useful information... you must look at the results, compare them to reality (and common sense) and then continue tweaking the model. Weather (specifically hurricane) modeling and prediction is a perfect example. Even after years of tweaking those models by NOAA, there are always a couple that are completely off.

It's obvious that Google's algorithm for search results is not proper for also placing tennis players in a list of who is greatest of all time.

- -

Did the author of this study also state that because Laver was never #1 (according to the ATP his highest rank was #3 in the open era), he failed to make the list? If that's true then why are other guys on the list that peaked at say #5?

Bud

Bionic Poster

Comparison of Open Era Stats (Dibbs vs Laver)

Some background: Tennis Open Era began April 28th, 1968. First Open Era GS tournament was the 1968 French Open.

- -

Eddie Dibbs: #18 on the list

Titles in OE: 22

GS Titles: never made it beyond two SF appearances

Highest Open Era ranking: #5

Versus top 10 in OE: 21/51 (29%)

Career winning % in OE: 584/252 (70%)

Rod Laver: didn't make the list

Titles in OE: 42

GS titles: 5 (won Wimby in 1968 and all majors in 1969)

Highest Open Era ranking: #3

Versus top 10 in OE: 8/20 (29%)

Career winning % in OE: 412/106 (80%)

Laver's numbers are either equal or greater in every single category. So, why didn't he make the list?

This is the common sense part of the process the author of this study should have realized and then tweaked the model, accordingly.
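For anyone checking the percentages above: the records read as wins/losses, so each percentage is wins divided by total matches played. A quick Python sketch of that arithmetic (the helper name `win_pct` is made up for illustration, and the figures are simply the ones transcribed in this post):

```python
def win_pct(wins, losses):
    """Winning percentage from a wins/losses record."""
    return 100.0 * wins / (wins + losses)


# Records as transcribed in the post, in (wins, losses) form.
records = {
    "Dibbs vs top 10": (21, 51),
    "Dibbs career": (584, 252),
    "Laver vs top 10": (8, 20),
    "Laver career": (412, 106),
}

for label, (wins, losses) in records.items():
    print(f"{label}: {wins}-{losses} ({win_pct(wins, losses):.0f}%)")
```

Read this way, 21/51 is 21 wins out of 72 matches, which is where the 29% comes from.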

Timbo's hopeless slice

Hall of Fame

lol, yes. he's sent me both those long winded emails and still doesn't really understand where i'm coming from...

Bud

Bionic Poster

lol, yes. he's sent me both those long winded emails and still doesn't really understand where i'm coming from...

It's astounding that he actually published this in a scientific journal when the algorithm/model is so obviously flawed and can not be applied to ranking great tennis players. He should have known by the results and then analyzing them using common sense, that something was way off.

It would be akin to using the Google algorithm/model (he references) to predict the weather.

VGP

Legend

I find this thread interesting.

I'm surprised the author of the paper actually responded to Timbo's hopeless slice. I'm curious to know your background.

It's a bit of a biased view to report Filippo Radicchi's response to your emails without sharing the content of what you sent.

Radicchi does make concessions in the discussion about penalizing younger players:

In general, players still in activity are penalized with respect to those who already ended their career only for incompleteness of information (i.e., they did not play all matches of their career) and not because of an intrinsic bias of the system.

...and about the inclusion of players whose careers were mainly in the Open Era:

Players do not need to be classified since everybody has the opportunity to participate to every tournament.

and he explained in his email of Laver being penalized for being a "transitional" player.

To knock the guy for his spelling is immature. By his responses and grammar, it looks like English isn't his first language.

I am a scientist and reading journal articles is my business and the guy comes off professional and allows the data to be the data.

He seems aware of the limitations of his study and is just presenting it as a useful, yet innocuous finding. Does it really matter in the grand scheme of things who the top players are? It's not like he's trying to commit cyber-terrorism to hack government networks or sway public opinion on which stocks to buy to affect global markets.

It's astounding that he actually published this in a scientific journal when the algorithm/model is so obviously flawed and can not be applied to ranking great tennis players. He should have known by the results and then analyzing them using common sense, that something was way off.

It would be akin to using the Google algorithm/model (he references) to predict the weather.

Sorry Bud, I normally like your posts, but this shows me that you are not a scientist. You can't fiddle with your data to make it come out as you like. In this case, it is what it is based on the algorithm. What you can do is just interpret what you get and come up with conclusions as to why you think it comes out as such, which Radicchi does.

If anything it reveals what's being discussed as the current "weak era/strong era" argument. I think that's why Federer ranks so low on the all-time list. He was beating everyone (not to mention that a lot of top players retired or were injured between 2003-2006), which affected his own "prestige" score. He just didn't rack up the wins against what would be perceived as "quality" players. Then, as a follow-up, it affects Nadal's ranking because most of his top-10 winning percentage (which, as many people have pointed out, is higher than others') is mostly against Federer.

The table list that sticks out to me is the Prestige column of Table 2. I'd agree that the prestige players listed reflects their performance for the given years despite support, but not agreement with the ATP/ITF rankings.

Manus Domini

Hall of Fame

Comments regarding how the algorithm was chosen, was not what my retort was about. It was about the definition of algorithm, quite obviously.

Secondly, do you UNDERSTAND the difference between ending a sentence with a period, as opposed to a question mark?

"can you not read, have a coding issue, or are you afflicted with ADD??"

I understand the difference. That question YOU asked was highly insulting to me. ADHD/ADD have nothing to do with learning disabilities; to claim that I cannot read because I have ADHD is demeaning and prejudiced because you may know one or two cases. I understand the difference better than you do, don't try running around it.

Bud

Bionic Poster

Sorry Bud, I normally like your posts, but this shows me that you are not a scientist. You can't fiddle with your data to make it come out as you like. In this case, it is what it is based on the algorithm. What you can do is just interpret what you get and come up with conclusions as to why you think it comes out as such, which Radicchi does.

If anything it reveals what's being discussed as the current "weak era/strong era" argument. I think that's why Federer ranks so low on the all-time list. He was beating everyone (not to mention that a lot of top players retired or were injured between 2003-2006) affecting his own "prestige" score. He just didn't rack up the wins against what would be perceived as "quality" players. Then, as a follow-up it affects Nadal's ranking because most of his top-10 winning percentage (as many people have pointed is higher than others) is mostly against Federer.

The table list that sticks out to me is the Prestige column of Table 2. I'd agree that the prestige players listed reflects their performance for the given years despite support, but not agreement with the ATP/ITF rankings.

It's not fiddling with the data. It's FIDDLING WITH THE MODEL that spits out the information! Have you once modeled data in your lifetime? Reading journals is great but modeling data is something different. Modeling data requires constant tweaking of the model as you gradually bring it in line with observed phenomena. One observed phenomenon is that Laver belongs on this list way ahead of some of these other guys who never did anything truly significant during their careers. I even compared Laver's OE career to Dibbs' OE career earlier. Why not comment on that? You conspicuously left that out.

If you truly agree with his results, IMO you've a bit of a screw loose and I seriously question if you work with modeling data. It's blatantly obvious that both the algorithm and the assumptions he's using are filled with HUGE holes. Why even create and then publish something like this when it has no bearing in reality? Did he create this so he can now brag about being a published author... regardless of the fact the results are comical?

He seems aware of the limitations of his study and is just presenting it as a useful, yet innocuous finding. Does it really matter in the grand scheme of things who the top players are? It's not like he's trying to commit cyber-terrorism to hack government networks or sway public opinion on which stocks to buy to affect global markets.

Why are you then making excuses for his results by comparing it to cyber-terrorism, stocks or the global marketplace? That is really taking a weak position. BTW, these results show absolutely nothing about the current weak/strong era argument. The best indicator about that, IMO, is each player's career record versus their top 10 peers.

How do you reconcile this:

Eddie Dibbs: #18 on the list

Titles in OE: 22

GS Titles: never made it beyond two SF appearances

Highest Open Era ranking: #5

Versus top 10 in OE: 21/51 (29%)

Career winning % in OE: 584/252 (70%)

Rod Laver: didn't make the list

Titles in OE: 42

GS titles: 5 (won all majors in 1969 + Wimby 1968)

Highest Open Era ranking: #3

Versus top 10 in OE: 8/20 (29%)

Career winning % in OE: 412/106 (80%)

Rabbit

G.O.A.T.

Eddie Dibbs

Titles: 22

GS Titles: never made it beyond two SF appearances

Highest rank: #5

Versus top 10: 21/51 or 29% (very low)

Career winning %: 584/252 or 70%

Sure, he also deserves to be above Nadal on the list... lol!

Yeah, this points out the flaw. I was definitely a Fast Eddie Dibbs fan back in the day. For those who don't know the name, Dibbs was one of the "Bagel Twins" the other being Harold Solomon. Both guys were around 5'6" tall and both hugged the baseline. Dibbs had a bit more firepower and variety than Solomon, but was in the same mold. Solomon is Jewish and Dibbs isn't, but according to Collins, wanted to be because Solomon is.

Solomon pursued the big tournaments and big titles. Dibbs, on the other hand, was a professional. By professional, I mean that he decided to win as much money as he could. Ergo, Dibbs was the leading prize money winner above Connors, Borg, et al for a period of time. How did he do it? He played smaller 2-3 day events, basically barnstorming the country like they did in the dawn of professional tennis.

Dibbs was no slouch, however. He played the Masters in MSG several times and did play the slams, although I think his heart was never in any of them save possibly the French. Dibbs was also one of Connors' favorite folks because of his sense of humor.

The last year they had the great American claycourt circuit, just prior to Flushing Meadows, Dibbs won a warm up tournament on green clay. I remember his talking about the USTA moving the national championships to "parking lots," a snipe at the move to concrete.

Dibbs was a great guy and a modernish version of Bobby Riggs in a lot of ways.

Oh yeah, the point of the rambling history is that the guy who placed Dibbs ahead of Nadal probably took into account Dibbs' overall record and winning years when he played smaller and more money tournaments.

Bud

Bionic Poster

Yeah, this points out the flaw.

Check out post #62. I transcribed their OE records. Laver matches or exceeds Dibbs in every single objective category concerning pertinent and measurable results. Yet, Dibbs ends up #18 while Laver ends up missing the list?! :lol:

Here it is again:

Eddie Dibbs: #18 on the list

Titles in OE: 22

GS Titles: never made it beyond two SF appearances

Highest Open Era ranking: #5

Versus top 10 in OE: 21/51 (29%)

Career winning % in OE: 584/252 (70%)

Rod Laver: didn't make the list

Titles in OE: 42

GS titles: 5 (won all majors in 1969 + Wimby 1968)

Highest Open Era ranking: #3

Versus top 10 in OE: 8/20 (29%)

Career winning % in OE: 412/106 (80%)

li0scc0

Hall of Fame

Using an algorithm is a meaningless endeavor.

A model attempts to predict a known and objective dependent variable using known independent variables. Using past results to predict future results.

In this case, we are predicting a subjective dependent variable. That in and of itself makes the concept flawed.

mandy01

G.O.A.T.

Bud

Bionic Poster

Using an algorithm is a meaningless endeavor.

A model attempts to predict a known and objective dependent variable using known independent variables. Using past results to predict future results.

In this case, we are predicting a subjective dependent variable. That in and of itself makes the concept flawed.

Agreed. When utilized for ranking the great tennis players throughout history, a simple Google algorithm, with faulty assumptions (inputs) will yield crap as we can clearly see :lol:

For this to truly be a study worthy of any respect, the author should have created a model with multiple inputs of varying weights and importance. Those inputs would then be tweaked and massaged based on the results (output.) Then, the study should have been peer-reviewed, vetted and potentially revised (which it obviously wasn't).

Another glaring error is using the OE stats only. A good scientist would have looked at stats/results pre-open era and determined a method of incorporating those into the model.

This is poorly applied and lazy science, IMO... and the results verify that.

Rabbit

G.O.A.T.

I gotta take that video down....I'm much more mature now....

VGP

Legend

Bud, I agree with you that Laver should have made the list based on the criteria you posted. You don't even have to compare him to Eddie Dibbs, Ken Rosewall is on the list.

There must have been a cut-off for pre-open era players who played in the open era. Or that Rosewall had more interaction (usable data) with the players who were prominent at the start of the open era. But your numbers do reflect the need for inclusion of Laver.

I think it would be hard to model pre-open data. Not everything was well kept track of.

As for the guy doing this to "brag" that he's a published author, he seems to have credentials that far exceed this publication. I think this was a bit of fun for him and his results are rubbing the pundits the wrong way. If you check his website, the guy just likes to crunch numbers.....

Like I mentioned in my previous post, I think the take-home message of the paper shouldn't be the title of Table 1, "Top 30 players in the history of tennis." That's just bad form. It should be the Prestige ranking in Table 2, "Best players of the year." Guys on that list that show up 3 or more times most people would agree are good players over an extended period of time.

Although, McEnroe only shows up once in that column.....and Boris Becker not at all.

Bud

Bionic Poster

Bud, I agree with you that Laver should have made the list based on the criteria you posted. You don't even have to compare him to Eddie Dibbs, Ken Rosewall is on the list.

There must have been a cut-off for pre-open era players who played in the open era. Or that Rosewall had more interaction (usable data) with the players who were prominent at the start of the open era. But your numbers do reflect the need for inclusion of Laver.

I think it would be hard to model pre-open data. The not everything was well kept track of.

As for the guy doing this to "brag" that he's a published author, he seems to have credentials that far exceed this publication. I think this was a bit of fun for him and his results are rubbing the pundits the wrong way. If you check his website, the guy just likes to crunch numbers.....

Like I mentioned in my previous post, I think the take-home message of the paper shouldn't be the the title of Table 1, "Top 30 players in the history of tennis." That's just bad form. It should be the Prestige ranking in Table 2, "Best players of the year." Guys on that list that show up 3 or more times most people would agree are good players over an extended period of time.

Although, McEnroe only shows up once in that column.....and Boris Becker not at all.

My point is... there are HUGE flaws in his inputs and/or methods, based on the results. He should have realized this prior to publishing the study. He also should have had a few sets of eyes review it so he didn't end up looking like a fool.

- - - -

Here's the link to the study for those who'd like to read it:

http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0017249

It was apparently peer-reviewed as well (sorry have to chuckle reading this).

Fugazi

Professional

Yeah but the list also doesn't seem to account for the importance of tournaments, which is a big mistake. The slams are what counts, the rest is just foreplay.

Apparently you did not read this bit:

"Radicchi ran an algorithm, similar to the one used by Google to rank Web pages, on digital data from hundreds of thousands of matches. The data was pulled from the Association of Tennis Professionals website. He quantified the importance of players and ranked them by a “tennis prestige” score. This score is determined by a player’s competitiveness, the quality of his performance and number of victories."

So the list is based on scientific research, NOT ON PERSONAL OPINION!!!!!!!!!!!!!!

Fugazi

Professional

Now that is a good post. I agree completely here.

Best player of all time is opinion as there is no established criteria to use as a measurement of how great a player is or if they are the best. The only people who this algorithm hold merit to are the ones who actually created the algorithm as they designed it according to their own criteria for the best player of all time.

Bud

Bionic Poster

Quotes from the Study

Here are some gems from the author:

"The results presented here indicate once more that ranking techniques based on networks outperform traditional methods."

Really... once more, huh?

When was this type of study performed in the past on ATP professionals?

- -

"The prestige score is in fact more accurate and has higher predictive power than well established ranking schemes adopted in professional tennis."

Where is there a single shred of evidence to support this statement?

- -

"Prestige rank represents only a novel method with a different spirit and may be used to corroborate the accuracy of other well established ranking techniques."

The results are way out in left field and have no bearing in reality when we compare things like total weeks at #1, GS titles, % of wins versus a player's top 10 peers, % of total wins to total losses, etc. So, how could these results be used to "corroborate the accuracy of other well established ranking techniques" (which the author already called inferior to his technique)?

Fugazi

Professional

The bias towards total matches played should have been dealt with...

The original can be found at PLoS ONE (Public Library of Science)

This link explains their methodology in detail, not that it makes a whole lot of difference to the flaws already mentioned. Reading it shows there is a significant bias towards total matches played, not quality of average match. This means that highly dominant players like Federer who achieved their slams in a shorter time than their peers (even to 14 slams he was years ahead of Sampras) are effectively penalised.

The research author's email is: f.radicchi@gmail.com

Filippo Radicchi - Department of Chemical and Biological Engineering, Northwestern University, Evanston, Illinois, USA.

Fugazi

Professional

Total number of matches played is your explanation. The percentages don't seem too important, just the absolute numbers. It's a big factor in the study, apparently. Big mistake that favored Jimbo.

Check out post #62. I transcribed their OE records. Laver matches or exceeds Dibbs in every single objective category concerning pertinent and measurable results. Yet, Dibbs ends up #18 while Laver ends up missing the list?! :lol:

Here is it again:

Eddie Dibbs: #18 on the list

Titles in OE: 22

GS Titles: never made it beyond two SF appearances

Highest Open Era ranking: #5

Versus top 10 in OE: 21/51 (29%)

Career winning % in OE: 584/252 (70%)

Rod Laver: didn't make the list

Titles in OE: 42

GS titles: 4 (won all majors in 1969)

Highest Open Era ranking: #3

Versus top 10 in OE: 8/20 (29%)

Career winning % in OE: 412/106 (80%)

Bud

Bionic Poster

Total number of matches played is your explanation. The percentages don't seem too important, just the absolute numbers. It's a big factor in the study, apparently. Big mistake that favored Jimbo.

The author completely discounted Laver's 4 Open Era GS titles in 1969! He didn't even make the freakin' list :lol:

How is that explained away in reference to his algorithm?

It appears the most important input in his algorithm is the total number of matches played... regardless of the quality of opponent or the win/loss ratio. Simply ludicrous.

Fugazi

Professional

Yeah, the slams don't seem to have more importance than the rest, which is a huge flaw.

VGP

Legend

I think he was trying to deviate from the point system used by the ATP, and to develop a formula in which beating Edberg in the second round of a tournament carries more weight than beating Donald Young.

In the ATP ranking point system, winning a second round match of a 500 event is worth the same no matter who you play. Radicchi is trying to allow for players to develop prestige depending on who they beat during their career, and to compare players on that basis.

This biases players with long careers who have success against top level players. Hence the result of Jimmy Connors being the "best."

The setup based on open era data penalizes players who were active at the transition between pre-open and open tennis, i.e. Laver.

It also penalizes players who are currently active and are still quite young. They haven't accrued enough data against top level players to develop enough prestige, e.g. Nadal, despite his 9 major titles.

Bud, I think Radicchi ignores Laver in the analysis on purpose but he does acknowledge him saying:

We identify Rod Laver as the best tennis player between 1968 and 1971, period in which no ATP ranking was still established.

But, if he were included in the analysis based on his open era results he might fall somewhere between #10 and #15. A lot of Laver's success was in pre-open amateur tennis and on the pro circuit. That division is too murky to include since there wasn't the opportunity for all players to interact with each other (open tennis).

Then, he'd have to change the whole tone of his paper. Which I think would be more appropriate. It would then have to read, "the best players of open era tennis." With the caveat of understanding that current players' careers aren't yet over and their data acquisition is still in flux.

The results are way out in left field and have no bearing on reality when we compare things like total weeks at #1, GS titles, % of wins versus a player's top 10 peers, % of total wins to total losses, etc. So, how could these results be used to "corroborate the accuracy of other well established ranking techniques" (which the author already called inferior to his technique)?

I like that he's trying to come up with a different means of analysis. His way takes out the weeks at #1 measure because that's based on tour points and not who you beat. For example, Del Potro shot up the rankings a couple of years back by playing and winning a string of 250/500 level events without meeting the top guys.

That said, Rios getting to #1 without winning a major title is reflected both in the rankings at the time and in Radicchi's prestige score for 1998, since Rios was beating quality players.

I guess taking out major titles as criteria is good because that minimizes the "cakewalk" draw argument.

And wins against top 10 peers again feed into the weak/strong era argument, because people bring up the complexion of the top 10 of 1992 and the top 10 of 2008, with the latter having fewer "accomplished" players.

Again, as with most science, it depends on how you look at the data and how you interpret the results.

Bud

Bionic Poster

However, I listed numerous criteria in my response and you chose to pick out just one (which also is probably the least significant). My argument was an aggregate of a number of results... not simply total weeks at #1.

What about the declarations he stated and I quoted in post #80? I'll repeat them below.

- - -

Quotes from the author:

"The results presented here indicate once more that ranking techniques based on networks outperform traditional methods."

"The prestige score is in fact more accurate and has higher predictive power than well established ranking schemes adopted in professional tennis."

"Prestige rank represents only a novel method with a different spirit and may be used to corroborate the accuracy of other well established ranking techniques."

^^ Where is the author's evidence to bolster these statements?

Sid_Vicious

G.O.A.T.

Nadal at 24? Says a lot about this study....

veroniquem

Bionic Poster

Yes, especially when Nadal is second in winning % overall in open era and has a winning record against all main rivals including 14 wins over Fed.

Bobby Jr

G.O.A.T.

You hit the nail on the head here.

The results are way out in left field and have no bearing in reality when we compare things like total weeks at #1, GS titles, % of wins versus a player's top 10 peers, % of total wins to total losses, etc. ...

Studies which aim to show a ranking of 'X'-ness you'd think would at least show correlative trends with other methods designed to show the same thing. As you say, basically the benchmark criteria by which people rate tennis players include total slam wins, weeks at #1 etc.

The results of the study may not be tweaked - but the study itself is.

Key point here: by omitting great players such as Laver because they didn't attain #1, the study is just silly. At worst the sample group should have covered everyone who won at least one slam.

I can come up with a more appropriate study in a couple of lines:

Chart the careers of all slam winners and everyone who has held the #1 spot, then apply this:

- Every match win earns 1 point

- Every win over someone ranked higher than them (at the time of the match) earns an extra 0.1 points

- Every win over someone ranked in the top 5 (at the time of the match) earns an extra 0.1 points*

- Every win over someone who has won a slam (anytime before said match) earns a player an extra 0.2 points

- If that person is also a current slam title holder a player earns a further 0.5 points

- Every win over the current #1 earns the player an extra 0.5 points (*instead of the points you'd get for beating a top 5 player)

Done.
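
For anyone who wants to try it, here is a rough sketch of the point scheme above in Python. The `Win` record and its field names are made up for illustration, and it reads "that person" in the slam-holder rule as the beaten opponent who has already won a slam. It scores one win at a time; a career total would just be the sum over all of a player's wins.

```python
from dataclasses import dataclass


@dataclass
class Win:
    """One match win. All field names are hypothetical, not from any real dataset."""
    winner_rank: int        # winner's ranking at the time of the match
    loser_rank: int         # beaten opponent's ranking at the time of the match
    loser_has_slam: bool    # opponent had won a slam before this match
    loser_holds_slam: bool  # opponent was a current slam title holder


def win_points(w: Win) -> float:
    """Points for a single win under the scheme listed above."""
    pts = 1.0                          # every match win
    if w.loser_rank < w.winner_rank:   # opponent was ranked higher
        pts += 0.1
    if w.loser_rank == 1:              # beating the #1 replaces the top 5 bonus
        pts += 0.5
    elif w.loser_rank <= 5:            # top 5 bonus
        pts += 0.1
    if w.loser_has_slam:               # opponent is a past slam winner
        pts += 0.2
        if w.loser_holds_slam:         # ...and a current title holder
            pts += 0.5
    return pts


def career_points(wins: list[Win]) -> float:
    """Career score: the sum of points over every recorded win."""
    return sum(win_points(w) for w in wins)
```

Under this reading, a #10 beating a #1 who currently holds a slam collects 1 + 0.1 + 0.5 + 0.2 + 0.5 points for that match.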

VGP

Legend

I think he's trying to buck the aggregation method of trying to determine the best player. I didn't mean to pick on "weeks at #1" as a method of determining the best player. It's just weeks at #1 is based on the point system of rankings.

Radicchi's use of the algorithm seems to buck the conventional methods. It takes away ATP rankings, major title wins, etc., the "usual" criteria for determining the "better" player.

But what I do like is in what he wrote back to Timbo about all players starting off as equal and their "prestige" built over match wins over different players over time. In a way it weights wins AWAY from grand slam wins, but toward a win against an accomplished player at any time.
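
To make that idea concrete, here is a minimal PageRank-style sketch in Python. It is not the paper's actual implementation: the function name, the `damping` value of 0.85, and the fixed iteration count are all assumptions for illustration. Everyone starts with equal credit, each player passes credit to the players who beat him in proportion to the number of losses, and the scores settle over repeated passes.

```python
from collections import defaultdict


def prestige(matches, damping=0.85, iters=200):
    """Toy prestige score. `matches` is a list of (winner, loser) pairs.

    Assumptions (not taken from the paper): damping=0.85, a fixed number of
    iterations, and undefeated players spreading their credit evenly.
    """
    players = sorted({p for match in matches for p in match})
    n = len(players)

    # losses[loser][winner] = how many times loser lost to winner
    losses = defaultdict(lambda: defaultdict(int))
    for winner, loser in matches:
        losses[loser][winner] += 1

    # Everyone starts with the same amount of credit.
    score = {p: 1.0 / n for p in players}

    for _ in range(iters):
        # A small share of credit is redistributed evenly on every pass.
        new = {p: (1.0 - damping) / n for p in players}
        for p in players:
            total_losses = sum(losses[p].values())
            if total_losses == 0:
                # Never lost: hand the credit out evenly to everyone.
                for q in players:
                    new[q] += damping * score[p] / n
            else:
                # Pass credit to each conqueror, weighted by number of losses.
                for q, count in losses[p].items():
                    new[q] += damping * score[p] * count / total_losses
        score = new
    return score
```

With three players where A beats B and C, and B beats C, the scores come out ordered A, then B, then C, and they always sum to 1. A win over a player who has himself collected a lot of credit is worth more than a win over someone with little, which is the "who you beat" effect described above.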

As for the statements pulled that you mention above, I think he's just trying to sell his argument. "Better" in this case is a matter of opinion and interpretation.

I see this method being good for the reasons I mentioned earlier.

- it is based on player-player interaction and not just points accrued over a season like the 52-week defense method in use now

- it seems to alleviate the weight placed on major titles for titles' sake, since it is possible to win a major without playing the best players (although a McEnroe/Connors USO final is pretty awesome - but their prestige is already built up and no matter where they meet it is a clash of the titans)

I agree that his exclusion of Laver is a glaring omission. He addressed it in his message to Timbo, saying that Laver would be severely penalized since it was the end of his career. But like I said, the data's the data and he should have been included even if he'd rank out of the top 10.

I think it would have been better for him to limit his inclusion of players to those who turned pro in 1969 or later without any prior experience from the pre-open era. It would really define the success of players rooted purely in the open era.

I also like that he does go on to apply the algorithm to players of the decade and on surfaces in the supplemental information. Those results are another whole kettle of fish.

The results on a per-surface relationship really might add another wrinkle to the mix. In one way, Sampras would be (and is) considered a great player due to his major title total, but on the other hand, half of them came on grass, and all of them were non-clay. Perhaps that's why he ends up ranking low because his "prestige" total decreases due to his results on clay against other historical players. Nadal also ranks low because of his dominance on clay and losses to less accomplished players (as of the paper's writing) on non-clay surfaces and his career is only midway.

Connors was a multi-surface winner over a long period of time with wins over great players of several generations - regardless of ranking. This algorithm really does reward longevity.

VGP

Legend

veroniquem said: Yes, especially when Nadal is second in winning % overall in open era and has a winning record against all main rivals including 14 wins over Fed.

Actually, I think that is why Nadal ranks lower. He doesn't build his prestige ranking by winning against a variety of good players, he does so against one.

And his winning percentage is heavily weighted to the clay court surface.

In looking at the results, it looks like players who truly dominate are penalized because there are fewer "great" players by which to gain prestige.

veroniquem

Bionic Poster

Against one? You're joking right? Rafa has a winning record vs Fed, Djoko and Murray. Who else has that?

On clay? Oh right: I guess his wins vs Djoko at W, USO and Olympics never happened. My bad.

(Rafa has beaten all 3 of them on grass and hard court and all 3 of them in non clay slams)

TennisMaverick

Banned

I understand the difference. That question YOU asked was highly insulting to me. ADHD/ADD have nothing to do with learning disabilities; to claim that I cannot read because I have ADHD is demeaning and prejudiced because you may know one or two cases. I understand the difference better than you do, don't try running around it.

Obviously, you don't understand the difference between a question and a statement.

Secondly, my first comment after I started this thread, was not posed to YOU, I DID NOT "QUOTE" YOU, and it was in the form of a question. It is not insulting to ask a question, nor are any of those conditions a stigma. They can be a strength or a weakness, depending upon the environment. PERIOD.

Thirdly, to say that ADD/ADHD and LDs do not have a relationship is untrue, just like many may have asthma, hay fever, or eczema. There are relationships, although they can be mutually exclusive. You have no clue as to my experience or expertise regarding learning issues.

Fourthly, everyone but you, is talking about the study. If you don't like the thread which I started, then don't read or post on it.

Lastly, your problems are far greater than your inability to decipher the nuances of language. I posted this study because it was done by a bona fide scientist as a statistical study designed around specific mathematical parameters, based on fact, not opinion. Me cutting and pasting it into a thread comment window does not make me gullible (your first statement, which you tried to take back), especially since I have not expressed my opinion about it.

Bottom line; you drew first blood; don't cry when you get cut open. If you can give it, you better learn how to take it Sheila.

Cooper_Tecnifibre4

Professional

Timbo's hopeless slice

Hall of Fame

I find this thread interesting.

I'm surprised the author of the paper actually responded to Timbo's hopeless slice. I'm curious to know your background.

It's a bit of a biased view to report Filippo Radicchi's response to your emails without sharing the content of what you sent.

Hi VGP

I wrote a fairly detailed initial email querying the validity of the algorithm with specific reference to cases where the data did not appear to support the conclusion. (Rod Laver's open era GS and the relative positions and performance of Tom Okker and Rafael Nadal, for example)

While Mr Radicchi has been unfailingly polite, he seems impervious to the admission of any alternative view.

I am a University Lecturer and have played tennis all my life.

(the 'loose'/'lose' thing is a reference to a different thread, essentially an in-joke, for which I apologize to those unfamiliar with the discussion in question)

Tennisisgod

Banned

Some background: Tennis Open Era began April 28th, 1968. First Open Era GS tournament was the 1968 French Open.

- -

Eddie Dibbs: #18 on the list

Titles in OE: 22

GS Titles: never made it beyond two SF appearances

Highest Open Era ranking: #5

Versus top 10 in OE: 21/51 (29%)

Career winning % in OE: 584/252 (70%)

Rod Laver: didn't make the list

Titles in OE: 42

GS titles: 4 (won all majors in 1969)

Highest Open Era ranking: #3

Versus top 10 in OE: 8/20 (29%)

Career winning % in OE: 412/106 (80%)

Laver's numbers are either equal or greater in every single category. So, why didn't he make the list?

This is the common sense part of the process the author of this study should have realized and then tweaked the model, accordingly.

I like this post a lot. Nice job, Mr. Algorithm

PCXL-Fan

Hall of Fame

VGP, even Sampras's grasscourt prestige doesn't rank in the top 5 using his system. Sampras is the 6th most prestigious on grass behind Connors (1st), Becker (2nd) and Federer (3rd).

There are so many reasons not to use this system.... the decline in # of grasscourt tournaments over the years interferes with results, as Connors and Newcombe (4th) played in an era with more grass tournies.

yeah...

Just to correct a few numbers on Laver. He won 5 open era majors (not 4). He won at least 54 open titles since 1968 (not 42), and some 15 more if one includes pro results since 1968. An 8/20 record against leading players is ridiculous. In his Grand Slam of majors in 1969 alone, he won 16 matches against top ten players or major winners. I can only assume that the author took those numbers from 1974 onwards, when the computer ranking had been established (and Laver was 35-36 years old). And even then he had more wins over Ashe, Borg, Tanner, Smith, Kodes, Okker, Panatta, Ramirez and other top tenners of those years.

Bud

Bionic Poster

If you read his published study, he also incorrectly uses loose as opposed to lose. I find it difficult to believe it was seriously peer reviewed as claimed.
